Every one of us has attended a cocktail party at least once. At such parties, it’s impractical for all guests to join a single conversation. Instead, we break into groups of twos, threes and fours to discuss whatever it is we end up discussing.

The result is dozens of simultaneous conversations – and a great deal of background noise. And yet, just about every guest will effortlessly tune out every single conversation bar the one they’re actually involved in. It’s a phenomenon known, tellingly, as the cocktail party effect.

The same situation often occurs inside vehicles: multiple passengers hold conversations while the driver needs to issue voice commands that the vehicle must understand.

The Glas.AI (R) VoiceTuner (R) library uses a set of deep neural networks that denoise a multi-voice signal and then split each voice onto a separate audio channel.

Only the channel containing the voice that speaks the predefined trigger word is passed through and output to the automatic speech recognition (ASR) step.
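A minimal sketch of the channel-selection step described above, assuming the separation stage has already produced one sequence of samples per speaker and that some trigger-word detector returns a confidence score between 0 and 1 for each channel. The function names, the `threshold` parameter and the `peak_amplitude` scorer are hypothetical illustrations, not part of the VoiceTuner (R) API; `peak_amplitude` only measures loudness and stands in for a real keyword-spotting model.

```python
from typing import Callable, List, Optional, Sequence

# A separated audio channel, modeled here as a plain sequence of samples.
Channel = Sequence[float]


def select_trigger_channel(
    channels: List[Channel],
    trigger_score: Callable[[Channel], float],
    threshold: float = 0.5,
) -> Optional[int]:
    """Return the index of the channel that best matches the trigger word.

    Hypothetical helper. Returns None when there are no channels or when
    even the best score falls below `threshold`. On ties, the lowest index wins.
    """
    if not channels:
        return None
    scores = [trigger_score(channel) for channel in channels]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best if scores[best] >= threshold else None


def route_to_asr(
    channels: List[Channel],
    trigger_score: Callable[[Channel], float],
    threshold: float = 0.5,
) -> Optional[Channel]:
    """Hypothetical gate: pass through only the trigger-word channel, drop the rest."""
    index = select_trigger_channel(channels, trigger_score, threshold)
    return None if index is None else channels[index]


def peak_amplitude(channel: Channel) -> float:
    # Hypothetical stand-in scorer for demonstration only: it measures
    # loudness, not whether the trigger word was spoken. A real system
    # would call a keyword-spotting model here.
    return max((abs(sample) for sample in channel), default=0.0)


if __name__ == "__main__":
    separated = [
        [0.0, 0.1, -0.1],   # passenger A
        [0.2, 0.9, -0.7],   # driver, assumed to say the trigger word
        [0.05, 0.0, 0.02],  # passenger B
    ]
    print(select_trigger_channel(separated, peak_amplitude))  # 1
    print(route_to_asr(separated, peak_amplitude))  # [0.2, 0.9, -0.7]
```

Picking the single highest-scoring channel and gating it on a threshold means the other conversations are never forwarded to ASR, even when more than one channel scores above the threshold.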

VoiceTuner (R) is available on Android and QNX; builds for other operating systems can be provided upon request.

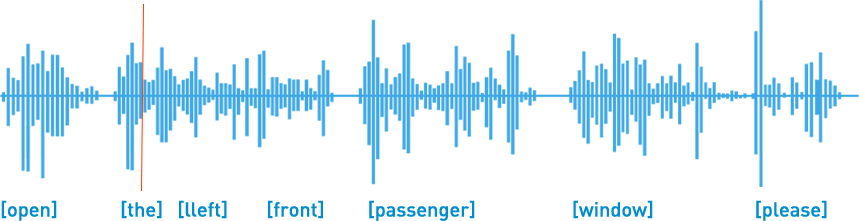

VoiceTuner (R) samples:

VoiceTuner (R) Neural Monaural Denoising, before and after:

VoiceTuner (R) Monaural Source Separation, before and after:

Our speech processing package includes:

- Age and Gender Identification
- Neural Monaural Source Separation
- Neural Monaural Noise Filtering
- Low-power Trigger Word
- On-Device ASR
- On-Device Neural NLP